Tuesday marked the debut of Vietnam-based electric vehicle (EV) maker VinFast Auto Ltd. (NASDAQ: VFS) on the U.S. equity market. The company went public through a SPAC merger with Black Spade Acquisition and is now the largest Vietnamese company listed in the United States.

Not only that, its market cap at the close of the first day of trading exceeds that of legacy U.S. automakers Ford Motor Co. (NYSE: F), General Motors Co. (NYSE: GM) and Stellantis N.V. (NYSE: STLA).

When trading opened Tuesday, VinFast stock was priced at $22.00 per share, more than double Black Spade's Monday closing price of $10.45. By the closing bell, shares were trading at $37.06.

According to the company's prospectus, Vietnamese billionaire Pham Nhat Vuong owns 99% of VinFast, or 2.3 billion shares. The listing included 6.97 million ordinary shares plus 14.83 million warrants, which are exercisable for another 14.83 million shares.

Multiplying roughly 2.3 billion shares by Tuesday's $37.06 close values VinFast at approximately $85.24 billion. By comparison, Ford's market cap at Monday's closing bell was $47.93 billion, GM's was $46.29 billion and Stellantis had a market cap of $56.45 billion. China's BYD Company (BYDDF) had a market cap of $88.61 billion when markets closed Monday.
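Market capitalization is simply shares outstanding times share price. The short sketch below reproduces the comparison above, assuming (as the article's figure implies) that only the approximately 2.3 billion shares held by Vuong are counted, with the newly issued shares and the warrants left out. The peer values are the Monday closing figures quoted above.

```python
# Market capitalization = shares outstanding x share price.
# Assumption: only the ~2.3 billion shares from the prospectus are counted,
# which reproduces the ~$85.24 billion figure; IPO shares and warrants are ignored.
VINFAST_SHARES = 2.3e9   # approximate share count
VINFAST_CLOSE = 37.06    # Tuesday closing price, USD

# Monday closing market caps quoted in the article, in billions of USD.
peers_billion = {
    "BYD": 88.61,
    "Stellantis": 56.45,
    "Ford": 47.93,
    "GM": 46.29,
}


def market_cap_billion(shares: float, price: float) -> float:
    """Return market capitalization in billions of USD."""
    return shares * price / 1e9


vinfast = market_cap_billion(VINFAST_SHARES, VINFAST_CLOSE)
print(f"VinFast: ${vinfast:.2f}B")
for name, cap in peers_billion.items():
    verdict = "above" if vinfast > cap else "below"
    print(f"  {verdict} {name} (${cap:.2f}B)")
```

Because 2.3 billion is a rounded share count, the result is an approximation; adding the 6.97 million new shares would nudge it slightly higher.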

But if you had run the numbers through OpenAI’s ChatGPT-4, you might have come up with different results. In a recent paper, Faith and Fate: Limits of Transformers on Compositionality, a group of U.S.-based researchers reported that Transformer-based large language models (LLMs) are not all that accurate when it comes to relatively simple math. (A Transformer is a neural network that learns context and meaning by tracking relationships in sequential data. Transformers are common in generative AI applications.)

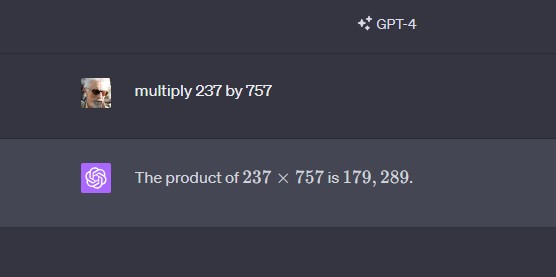

Try it yourself. Use your calculator to perform this multiplication: 237 × 757. The answer is 179,409. Now, type the same problem into ChatGPT-4 and compare the result.
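By hand, 237 × 757 is not one step but several: one partial product for each digit of the multiplier, shifted by its place value, and then a final sum. The sketch below is a plain schoolbook decomposition written for illustration (the function names are hypothetical, not code from the paper); it makes those intermediate steps explicit, and each one is a point where an error can creep in.

```python
def partial_products(a: int, b: int) -> list[int]:
    """Schoolbook multiplication: one partial product per digit of b,
    shifted left by that digit's place value (units digit first)."""
    products = []
    for place, digit_char in enumerate(reversed(str(b))):
        products.append(a * int(digit_char) * 10 ** place)
    return products


def long_multiply(a: int, b: int) -> int:
    """Sum the partial products to get the full result."""
    return sum(partial_products(a, b))


steps = partial_products(237, 757)
for step in steps:
    print(f"{step:>9,}")
print(f"{long_multiply(237, 757):>9,}")
```

Here the three partial products are 237 × 7, 237 × 50 and 237 × 700, and their sum is 179,409.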

The Faith and Fate researchers looked at two propositions: first, Transformer LLMs “solve computational tasks by reducing multi-step compositional reasoning into linearized path matching,” and second, “due to error propagation, Transformers may have inherent limitations on solving high-complexity compositional tasks that exhibit novel patterns.”

Here is their conclusion:

> Our empirical findings suggest that Transformers solve compositional tasks by reducing multi-step compositional reasoning into linearized subgraph matching, without necessarily developing systematic problem solving skills. To round off our empirical study, we provide theoretical arguments on abstract multi-step reasoning problems that highlight how Transformers’ performance will rapidly decay with increased task complexity.
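A toy model shows why errors that compound across steps make accuracy fall off quickly. Suppose a task succeeds only if every step is right, and each step independently succeeds with probability p. The whole task then succeeds with probability p raised to the number of steps. This is a simplification for illustration, not a reproduction of the paper's formal argument, and the per-step accuracy of 0.95 below is a hypothetical value rather than a measured one. For an n-digit by n-digit multiplication, the step count is taken as the n² single-digit products in the schoolbook method.

```python
# Toy model of error propagation (an illustrative assumption, not the paper's model):
# a task succeeds only if every step succeeds, and steps fail independently.
PER_STEP_ACCURACY = 0.95  # hypothetical per-step success probability


def task_success(steps: int, p: float = PER_STEP_ACCURACY) -> float:
    """Probability that all `steps` independent steps succeed."""
    return p ** steps


for n in range(2, 6):
    steps = n * n  # single-digit products in an n-by-n schoolbook multiplication
    print(f"{n}x{n} digits: {steps:2d} steps -> {task_success(steps):.1%} success")
```

Even at 95% per step, the chance of getting every step right drops below a coin flip by the four-digit case. The model counts only digit products and ignores additions and carries, so it understates how many steps a real multiplication involves.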

How bad is it? According to the research, ChatGPT-4 correctly solves a three-digit by three-digit multiplication problem like the one in the illustration only 59% of the time. And it gets worse.

For four-digit by four-digit multiplication, ChatGPT-4 gets the correct answer just 4% of the time. For five-digit by five-digit problems, it never gets the correct answer.

For a four-digit and a five-digit example, the correct answers are 2,365 × 4,897 = 11,581,405 and 34,456 × 28,793 = 992,091,608.
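These answers are easy to confirm with exact arithmetic. Python integers have arbitrary precision, so the products below carry no rounding error; the `,` format option only inserts thousands separators for display.

```python
# Python integers are exact (arbitrary precision), so there is no rounding error.
problems = [(2_365, 4_897), (34_456, 28_793)]

results = {}
for a, b in problems:
    results[(a, b)] = a * b
    print(f"{a:,} x {b:,} = {a * b:,}")
```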


Thank you for reading! Have some feedback for us?

Contact the 24/7 Wall St. editorial team.